Educators strive to maximize their students’ learning outcomes by applying effective instructional practices. Although no instructional approach is universally effective, some teaching practices are more effective than others. Therefore, it is important to reliably identify and prioritize the most effective instructional practices for populations of learners. Rigorous experimental research is generally agreed to be the most reliable approach for identifying “what works” in education. However, scientific research has notable limitations and does not always generate valid findings.

In large-scale replication projects in psychology and other fields, researchers often failed to replicate the findings of previously conducted studies (e.g., Klein et al., 2018; Open Science Collaboration, 2015), casting doubt on the validity of research findings. Researchers, including those in education, have also reported using a variety of questionable research practices such as p-hacking (exploring different ways to analyze data until desired results are obtained), selective-outcomes reporting (reporting only the analyses that yield desired results), and hypothesizing after results are known (HARKing; e.g., Fraser et al., 2018; John et al., 2012; Makel et al., 2019), all of which increase the likelihood of false-positive findings (Simmons et al., 2011). Moreover, research studies in education often involve relatively small and underpowered samples that do not adequately represent the population being studied, which further threatens the validity of study findings. Finally, most published research lies behind a paywall, inaccessible to many practitioners and policymakers (Piwowar et al., 2018), which reduces the potential application and impact of research. Open science and crowdsourcing are two related developments in research that aim to address these issues and improve the validity and impact of research.

Open science is an umbrella term that includes a variety of practices aiming to open and make transparent all aspects of research with the goal of increasing its validity and impact (Cook et al., 2018). For example, preregistration involves making one’s research plans transparent before conducting a study in order to discourage questionable research practices such as p-hacking and HARKing by making them easily discoverable. Data sharing is another key open-science practice, which allows the research community to verify analyses reported in an article, as well as analyze data sets in other ways to examine the robustness of reported findings, thereby serving as a protection against p-hacking. Materials sharing involves openly sharing materials used in a study (e.g., intervention protocols, intervention checklists) to enable other researchers to replicate the study as faithfully as possible. Finally, open access publishing and preprints provide free access to research to anyone with internet access, thereby democratizing the benefits and impact of scientific research beyond those with institutional subscriptions to the publishers of academic journals.

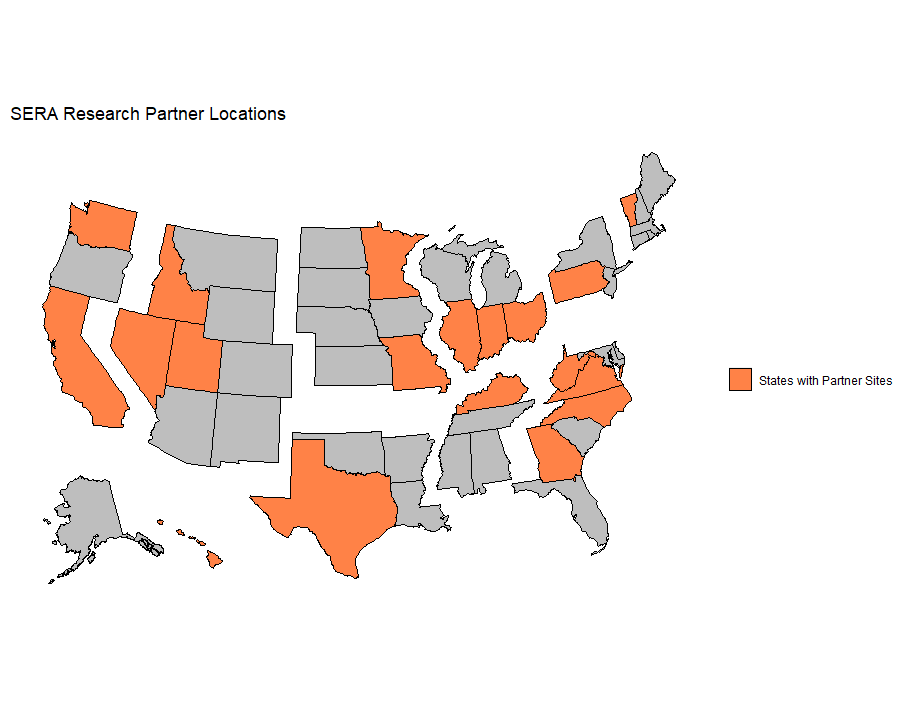

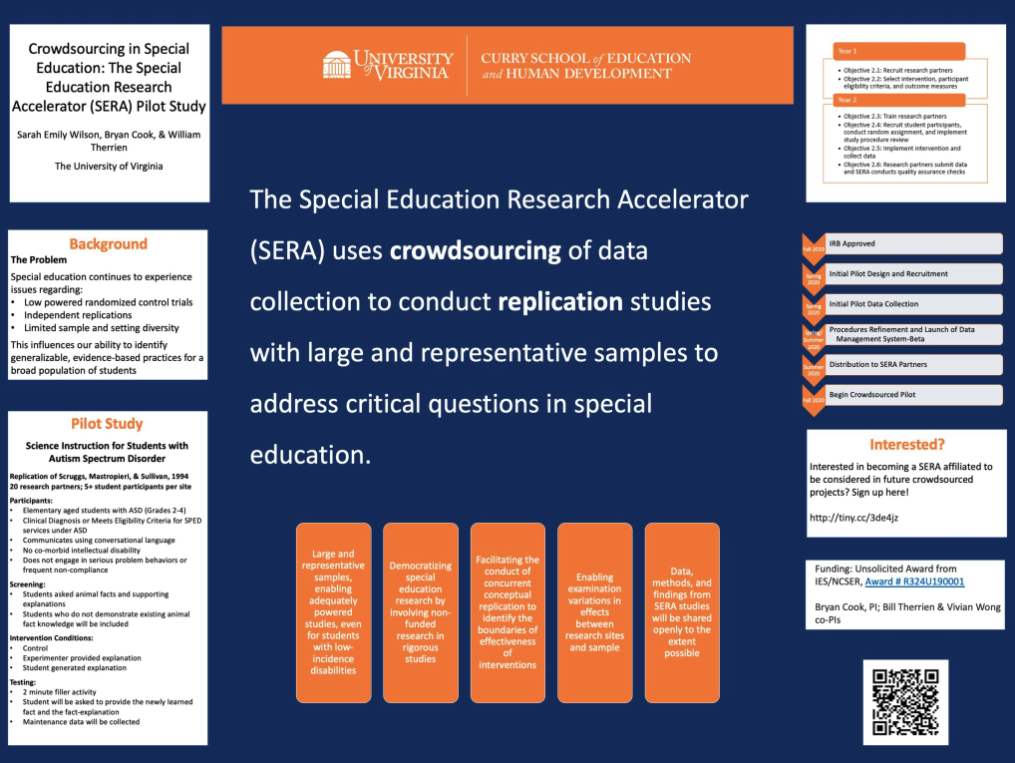

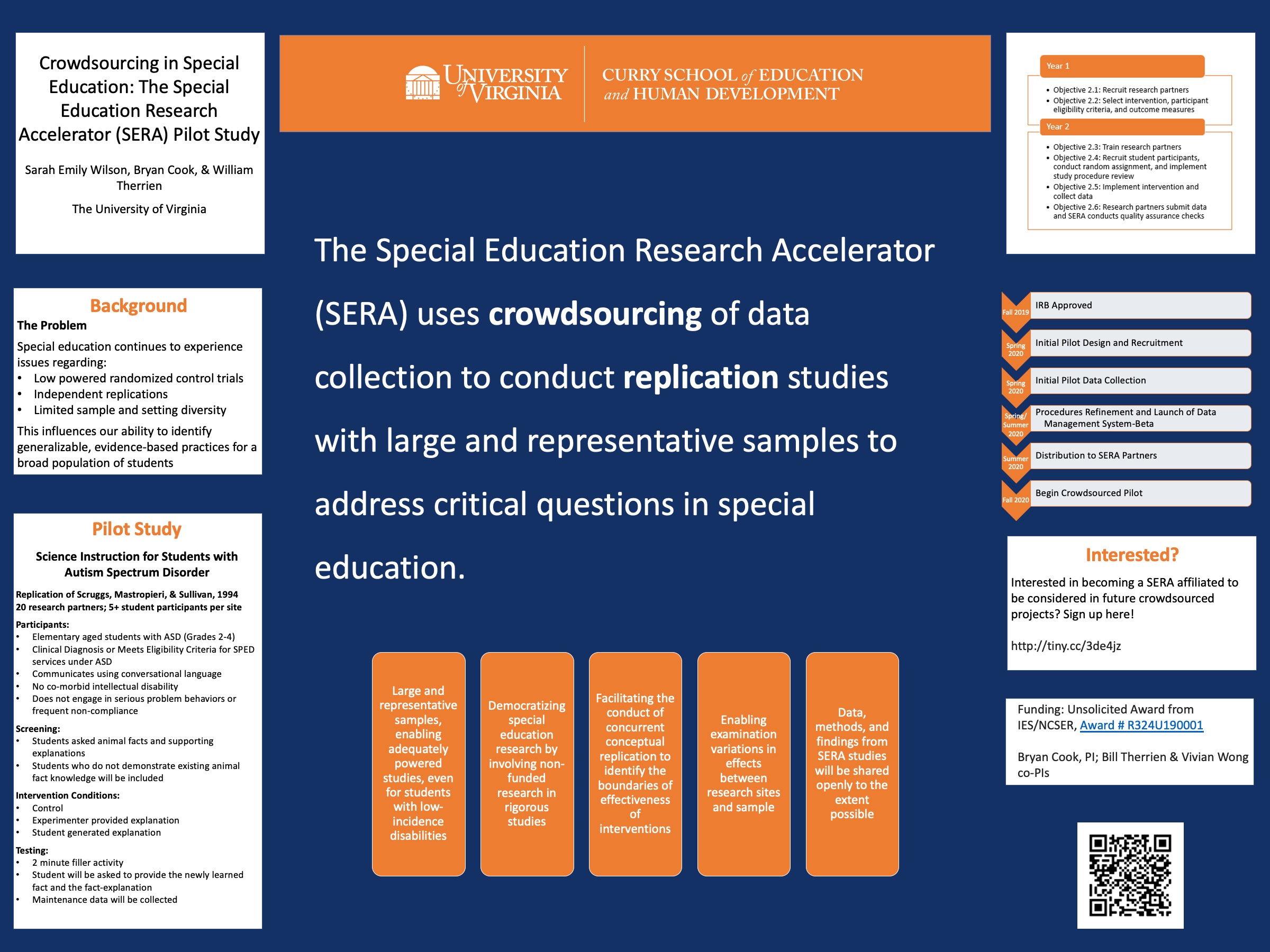

Crowdsourcing in research involves large-scale collaboration on various elements of the research process and can take many forms (Makel et al., 2019). For example, multiple research teams might conduct independent studies examining the same issues, as the Open Science Collaboration (2015) did when they conducted 100 replication studies to examine the reproducibility of findings in psychology. Crowdsourcing has also taken the form of multiple analysts analyzing the same data set to answer the same research question in order to examine the effects of different analytic decisions on study outcomes (Silberzahn et al., 2018). The most frequent application of crowdsourcing in research, which we have adopted in the Special Education Research Accelerator, is to involve many research teams in the collection of data, thereby increasing the size and diversity of the study sample – which serves to improve the study’s power and external validity. For example, Jones et al. (2018) involved > 200 researchers collecting data from > 11,000 participants in 41 countries to evaluate facial perceptions. Regardless of how it is employed, “crowdsourcing flips research planning from ‘what is the best we can do with the resources we have to investigate our question?’ to ‘what is the best way to investigate our question, so that we can decide what resources to recruit?’” (Uhlmann et al., 2019, p. 417).

Although open science and crowdsourcing are independent constructs (i.e., research that is open is not necessarily crowdsourced, and crowdsourced studies are not necessarily open), the two approaches are closely aligned and complementary. The ultimate goal of both open science and crowdsourcing is to improve the validity and impact of research. Although they use different means to achieve this goal, crowdsourcing facilitates making research open, and open science facilitates crowdsourcing. For example, in a crowdsourced study in which data are collected by many research teams, study procedures have to be determined and disseminated to collaborating researchers before the study begins. Because study procedures are determined and documented prior to conducting the study, they can readily be posted as a preregistration. Additionally, if data are being collected across many researchers in a crowdsourced study, the project will need a clear data-management plan to enable data to be collected and entered reliably across researchers. Such well-organized data-management plans that include metadata not only facilitate the integration of data across researchers on the project, but also make data more readily usable by other researchers when shared and reduce the burden of creating supporting metadata when sharing. Moreover, because data in many crowdsourced studies are collected by many different researchers, individual researchers may be less likely to feel that they “own” the data and therefore may feel less reluctant to share them. Similarly, materials used in crowdsourced studies must already have been developed and shared with the many researchers collecting data, so – like data – materials from crowdsourced research are ready to be uploaded and shared.
Most broadly, crowdsourcing and open science share an ethos of collaboration and sharing for the betterment of science, and we conjecture that most researchers involved in crowdsourced studies will want to make their research as open as possible. As such, we look forward to making our crowdsourced research through the Special Education Research Accelerator as open as possible.

Bryan G. Cook, Ph.D.

Principal Investigator

Bryan G. Cook, Ph.D., is a Professor in Special Education at UVA with expertise in standards for conducting high-quality intervention research in special education, replication research in special education, and open science. He co-directs, with Dr. Therrien, the Consortium for the Advancement of Special Education Research (CASPER) and is an ambassador for the Center for Open Science. He is Past President of CEC’s Division for Research, chaired the working group that developed CEC’s (2014) Standards for Evidence-Based Practices in Special Education, coedits Advances in Learning and Behavioral Disabilities, and is coauthor of textbooks on special education research and evidence-based practices in special education. Cook plays an integral role in developing infrastructure and supports for SERA, conducting the pilot study, and assessing the usability and feasibility of using SERA to conduct future replication pilot studies.